Maryland research contributes to Google’s AlphaGo AI system

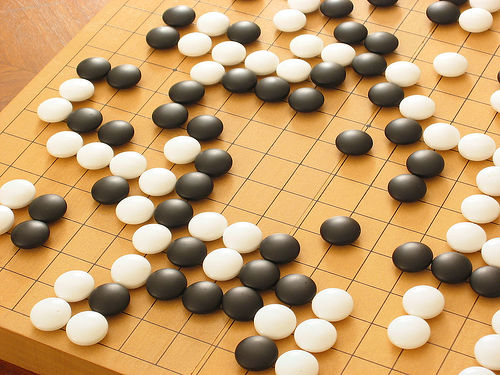

Ideas first explored by four University of Maryland researchers in a 2005 paper are at the core of AlphaGo, Google’s Go-playing artificial intelligence (AI) system. AlphaGo defeated Lee Se-Dol, one of the top Go players in the world, in early March, by a score of four games to one. Last fall, the AI system also defeated Fan Hui, Europe’s highest-ranked professional Go player, 5–0.

AlphaGo is the latest in the line of AI systems developed to beat humans at games like checkers, chess, Scrabble and Jeopardy. Each successive challenge extends the boundaries of machine learning and its capabilities.

Go is a 2,500-year-old game popular in east Asia, where players take turns placing black or white stones on a board, trying to capture their opponent’s stones or surround empty space to make points of territory. An average 150-move game contains 10^170 possible board configurations, a level of complexity that presented entirely new challenges for computer programmers. They could not rely on the traditional method of constructing a search tree that covers every possible move and looking for the best one. A different approach that could recognize patterns in the game was needed.

AlphaGo is powered by a combination of machine learning and new Monte Carlo tree search techniques, guided by a “policy network,” which selects the next move, and a “value network,” which predicts the winner of the game. Both networks are implemented using brain-inspired deep neural network technology with millions of connections. AlphaGo couples these techniques with extensive training, both by observing human play and by playing against itself.

A quartet of University of Maryland researchers first proposed an algorithm for sequential decision-making problems under uncertainty that estimates the value of possible actions by Monte Carlo simulation while balancing exploitation and exploration.
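To make the exploitation-versus-exploration trade-off concrete, here is a minimal sketch of UCB1, a widely used upper confidence bound rule, applied to a simple simulated choice among actions with unknown win rates. It is an illustration only, not the algorithm from the 2005 paper and not AlphaGo's implementation; the function names `ucb1_select` and `run_bandit` and the win probabilities are hypothetical.

```python
import math
import random


def ucb1_select(counts, totals, t):
    """Pick the action with the highest UCB1 score at round t."""
    # Try every action once before comparing scores.
    for action, n in enumerate(counts):
        if n == 0:
            return action
    scores = [
        # Average reward so far (exploitation) plus a bonus that
        # shrinks as the action is sampled more often (exploration).
        totals[a] / counts[a] + math.sqrt(2.0 * math.log(t) / counts[a])
        for a in range(len(counts))
    ]
    return max(range(len(counts)), key=scores.__getitem__)


def run_bandit(win_probs, n_rounds, seed=0):
    """Simulate n_rounds of play; return how often each action was tried."""
    rng = random.Random(seed)
    counts = [0] * len(win_probs)
    totals = [0.0] * len(win_probs)
    for t in range(1, n_rounds + 1):
        action = ucb1_select(counts, totals, t)
        # Monte Carlo sample: reward 1 with the action's (hidden) win rate.
        reward = 1.0 if rng.random() < win_probs[action] else 0.0
        counts[action] += 1
        totals[action] += reward
    return counts


if __name__ == "__main__":
    # Hypothetical win rates for three candidate actions.
    print(run_bandit([0.2, 0.5, 0.8], 5000))
```

In this sketch, most samples end up going to the action with the highest win rate, while the weaker actions are still tried occasionally so that an early unlucky streak does not rule out a good action for good.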
Institute for Systems Research (ISR) Postdoctoral Researcher Hyeong Soo Chang,* Professor Michael C. Fu (Smith School/ISR), Electrical and Computer Engineering (ECE) Ph.D. student Jiaqiao Hu,** and Professor Steven I. Marcus (ECE/ISR) collaborated on the paper, “An adaptive sampling algorithm for solving Markov decision processes.” It appeared in the January-February 2005 issue of the journal Operations Research.

“Our paper was the first to apply upper confidence bounds to Markov decision processes by generating so-called Monte Carlo trees,” Fu says.

The next year, Rémi Coulom coined the term “Monte Carlo tree search” and described how the Monte Carlo method could be applied to game-tree search in the paper, “Efficient Selectivity and Backup Operators in Monte-Carlo Tree Search.” Also in 2006, Levente Kocsis and Csaba Szepesvári developed an algorithm based on Monte Carlo tree search with upper confidence bounds as applied to games in their paper, “Bandit based Monte-Carlo Planning.” The two papers were the impetus for tackling the AI problem in Go.

“Basically, the two main papers that introduced Monte Carlo tree search for games cited our paper as having the main seeds for their algorithms,” Fu says. “Interestingly, our paper has only 72 citations in Google Scholar, whereas these two ‘children’ articles have 1370 and 512 citations.”

The March matchup of AlphaGo versus Lee Se-Dol was yet another watershed moment for modern artificial intelligence. The techniques used by AlphaGo have implications for other tasks that require recognition of complex patterns, long-term planning and decision-making. Applications include image and speech recognition, natural language processing and robotics.

* Hyeong Soo Chang is a professor in the Department of Computer Science and Engineering at Sogang University in Seoul, South Korea.
** Jiaqiao Hu is an associate professor in the Applied Mathematics and Statistics Department at Stony Brook University.

March 18, 2016