Clark School Researchers Help Decode Speech Recognition

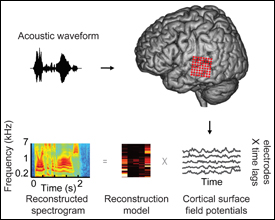

Clark School Professor Shihab Shamma (electrical and computer engineering/Institute for Systems Research [ISR]), former ISR postdoctoral researcher Stephen David*, and alumnus Nima Mesgarani** (Ph.D. '08, electrical engineering) are three of the authors of a new study on how the human auditory system processes speech, published in the Jan. 31, 2012, edition of PLoS Biology.

The paper, "Reconstructing Speech from Human Auditory Cortex," details recent progress in understanding the computational mechanisms the human brain uses to decode speech. The researchers took advantage of rare neurosurgical procedures for the treatment of epilepsy, in which neural activity is measured directly from the brain's cortical surface. These procedures offered a unique opportunity to characterize how the human brain performs speech recognition. The recordings helped the researchers determine which speech sounds could be reconstructed, or decoded, from higher-order areas of the human auditory system. The decoded speech representations allowed individual words to be read out and identified directly from brain activity during single-trial sound presentations.

The results provide insights into higher-order neural speech processing and suggest that it may be possible to read out intended speech directly from brain activity. Potential applications include devices for people who have lost the ability to speak through illness or injury.

Brian N. Pasley of the Helen Wills Neuroscience Institute at the University of California, Berkeley, is the paper's lead author. In addition to the Clark School-affiliated researchers, the co-authors are Robert Knight, University of California, San Francisco, and University of California, Berkeley; Adeen Flinker, University of California, Berkeley; Edward Chang, University of California, San Francisco; and Nathan Crone, Johns Hopkins University.
\* Stephen David is now an assistant professor at Oregon Health & Science University, where he heads the Laboratory of Brain, Hearing, and Behavior in the Oregon Hearing Research Center.

\*\* Nima Mesgarani is currently a postdoctoral researcher in the Department of Neurological Surgery at the University of California, San Francisco, School of Medicine.

This research was also covered in USA Today.

February 6, 2012