UMD Researchers Eye Advances in Autonomy

On a quiet peninsula formed by the Potomac River and the Chesapeake Bay, the future of aerial autonomy is taking shape, with University of Maryland UAS Test Site engineers and pilots testing research that promises to yield major advances. The Test Site team is part of a massive collaborative endeavor, dubbed AI and Autonomy for Multi-Agent Systems (ArtIAMAS), that brings together experts from UMD, University of Maryland Baltimore County (UMBC), and the Army Research Laboratory (ARL). Led by Maryland Robotics Center director and aerospace engineering professor Derek Paley, ArtIAMAS sets its sights on the most challenging “holy grails” in AI, autonomy, and robotics.

To achieve their ambitious goals, researchers are leveraging facilities and resources that include not only the Test Site itself but also the newly opened SMART Innovation Center at the adjacent University System of Maryland at Southern Maryland (USMSM). The center boasts an 80-by-60-foot air-land lab with an amphibious pool, as well as outdoor space for ground and air vehicle testing. “Between the Test Site, reachback to the main UMD campus and partners, and the SMART center, our mix of expertise, resources and facilities adds up to a dream come true for autonomy researchers,” said UMD UAS Test Site Director Matt Scassero, who is also the A. James Clark School of Engineering’s lead for research, innovation and outreach at USMSM. “And all this has come about as part of a plan to create an innovation hub in St. Mary’s County, Maryland, boosting the regional economy while providing UMD engineers with the resources they need to achieve dramatic breakthroughs.”

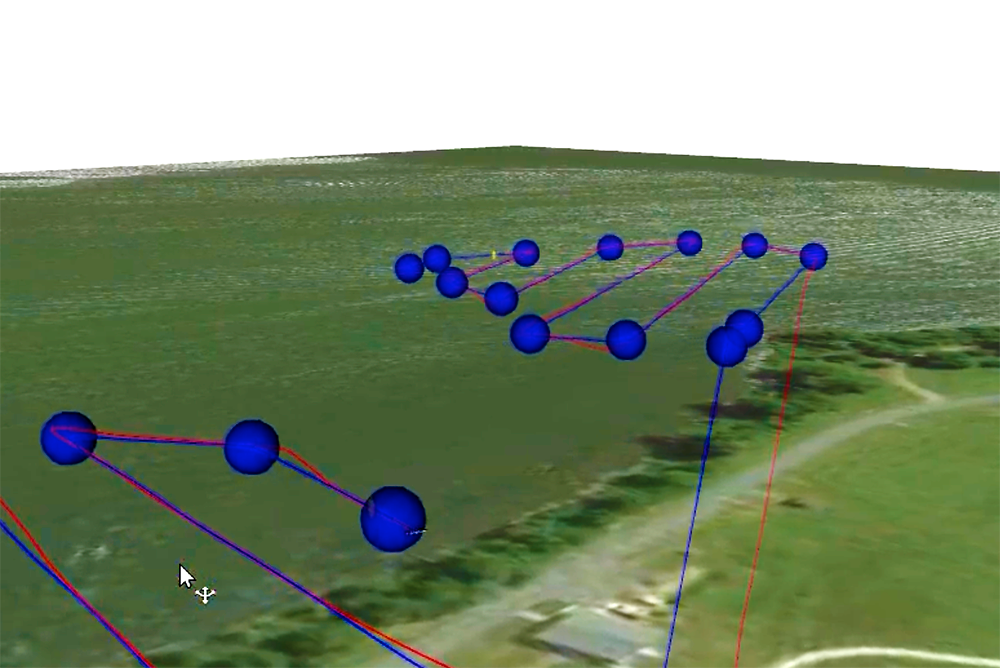

In one ArtIAMAS project involving the Test Site, researchers are using machine learning to help drones perceive human action more precisely, for example by distinguishing whether a human far below is reaching for a weapon or simply using a cell phone. Smart drones use algorithms (which, taken together, form a neural network) to help them make such distinctions. But as Paley explains, “neural networks require an incredible amount of data to train and to be able to perceive objects. By using thousands of images that are annotated, the algorithms can be trained to detect specific objects and actions.” That’s one of the ways in which the UMD UAS Test Site can help.

Paley and a doctoral student, Wei Cui, have been using Unity, a platform favored by video game developers, to simulate drone flights, but the platform relies on fictitious imagery that bears only a partial resemblance to physical environments. To add more reality to the mix, the Test Site team is conducting outdoor flights at a local farm and will soon start indoor ones at the SMART center, collecting hundreds of hours’ worth of real-world imagery and data that can be used to sharpen a machine’s perceptual skills. The information will then be fed into the search algorithm being developed by Paley and Cui, for use in an Army platform known as Mavericks. The platform is intended to support autonomous drones that are capable of working together and making their own decisions without human intervention, or even GPS support.

Keeping Ground Robots on the Right Path

While Paley tackles the complexities involved in creating intelligent drone swarms, his colleague Dinesh Manocha is homing in on another thorny robotics problem: teaching robots how to navigate uneven or obstacle-laden terrain.
Manocha, a Distinguished University Professor with joint appointments in UMD’s computer science and electrical and computer engineering departments, is an ArtIAMAS co-PI who leads one of three research thrusts defined under the project. A fellow of the Association for the Advancement of Artificial Intelligence (AAAI), he has been working on robotics and navigation technologies for three decades. “Army operations often involve complex terrain,” Manocha said. “If robots are being deployed in such environments, then they have to be able to find their way around such terrain in autonomous manner using on-board sensors. Otherwise, they’re going to get stuck or damaged, and unable to complete their tasks.”

His group’s recent work combines techniques from computer vision and machine learning to help ground robots navigate complex indoor and outdoor scenes. A potentially even more powerful approach is to combine ground and aerial robots, with drones flying overhead and communicating information to their terrestrial counterparts. “The drones can assess the landscape below and relay this information to the ground robots, thus helping them navigate,” Manocha said. “They can tell a robot ‘don’t go that way–there’s a rock.’”

Finding the right location to test such a hybrid system isn’t easy: it requires facilities and personnel that can support simultaneous testing of ground-based and aerial unmanned systems, preferably in both indoor and outdoor environments. Between the Test Site and the SMART center, Manocha and his students now have the testing environment they need for future research in this area. “This incredible indoor/outdoor space enables us to carry out kinds of research that were not feasible before,” Manocha said. “We anticipate being able to make significant progress.”

Only the Beginning

Now in its second year, the ArtIAMAS program has received more than $13 million in funding to date, with the total amount over five years expected to surpass $68 million.
The program’s aerial autonomy projects are quickly ramping up in scale as foundational work, including the collection and annotation of data, is completed. “We’re going to be doing some very exciting things in the months ahead,” Scassero said. “This is one of those exceptional moments where the mix of people, facilities, and resources is in place to really make things happen. And we at the Test Site are thrilled to be part of it.”

April 11, 2022

Enhancing Drones’ Perceptual Skills
