Maryland researchers awarded $1M DARPA Lagrange program cooperative agreement

A team of four University of Maryland researchers has been awarded a $1M, 18-month cooperative agreement by the Defense Advanced Research Projects Agency (DARPA) for “An Optimization-Based Approach to Breaking the Neural Code.” The project is part of DARPA’s Lagrange program. The principal investigator is Professor Steve Marcus (ECE/ISR). He is joined by three co-investigators: ISR-affiliated Assistant Professor Behtash Babadi (ECE), Professor Michael Fu (BMGT/ISR) and Professor Jonathan Simon (ECE/Biology/ISR).

Recent technological advances in acquiring neural data from the brain, from magnetoencephalography (MEG) and electroencephalography (EEG) to two-photon imaging and high-density micro-electrode arrays, have resulted in an abundance of large neural data sets. These sets span different spatial scales, time scales, conditions, and recording methodologies. In particular, the high temporal resolution and noninvasive nature of MEG/EEG neural recordings in human subjects open a unique window of opportunity to decipher the complex dynamic cortical interactions underlying sophisticated behavior, and break the so-called “neural code.” Before the potential of these data sets can be exploited, however, we first require computationally efficient and robust analysis techniques capable of capturing the dynamics and statistical characteristics of the recorded brain activity.

Current modeling and estimation paradigms face many challenges in using such high-dimensional neural data. Model-based approaches to neural system identification require fitting high-dimensional models to neural data; yet existing approaches do not scale favorably with the now-ubiquitous high dimensionality of current data sets, which may span hours of data from hundreds of sensors.
Also, emerging applications of neuroimaging in the fields of brain-computer interface (BCI) and neural prosthetics require robust, real-time processing of the neural data, whereas existing robust techniques are primarily designed for batch-mode operation and are not well suited for real-time processing. In addition, the time-sensitivity and high-accuracy requirements of such applications typically demand risk-sensitive optimization procedures with optimality guarantees, which are lacking in currently available neural data analysis techniques. Existing dimensionality reduction techniques are also off-the-shelf, taken from unrelated disciplines such as image processing; these techniques do not capture the geometric/topological organization upon which cortical function is based. Finally, existing techniques are driven by linear estimation paradigms suitable for Gaussian data; the combined non-linearity and non-Gaussianity of cortical activity prevent these techniques from capturing the true stochastic properties of brain function. Despite the wide usage of optimization techniques in the analysis of neural data, no mathematically principled optimization paradigm exists for modeling and identification of cortical function with statistical robustness.

The researchers will develop an innovative optimization framework tailored to noninvasive neuroimaging data from the human brain that is scalable, risk-sensitive and real-time, and is capable of capturing the stochastic and geometric features unique to cortical function. This framework also will be formulated in a way that will generalize easily to other application areas. The team will work on four tasks: risk-sensitive higher-order inverse solutions, fast real-time algorithms for non-convex maximum a posteriori probability (MAP) problems, an information geometric approach to dimensionality reduction for neural data, and the application of all of these to auditory MEG experiments.

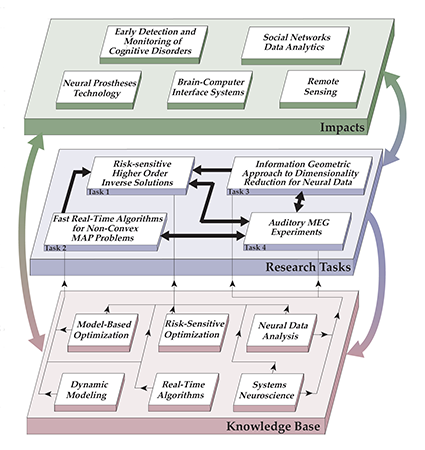

Image: The research will build on the knowledge base from several disciplines (bottom plane) and provide an integrated research plan (middle plane) leading to game-changing scientific and technological impacts (top plane).

As the graphic above shows, techniques will be integrated from various domains including model-based optimization, risk-sensitive optimization, dynamic modeling, real-time algorithms, neural data analysis and systems neuroscience to create an optimization-based paradigm for addressing challenges in brain science. The tasks are synergistically interconnected: real-time algorithms as well as the dimensionality reduction techniques can help speed up the inverse problems; dimensionality reduction techniques will be employed in the analysis of the team's experimental data; application of the algorithms to real data will not only be used to address the neuroscience questions, but also will serve as feedback to improve modeling and optimization techniques (middle plane). Because they are grounded in well-established theoretical domains (bottom plane), the methods will apply to a variety of problems in other domains. They will thereby apply to immediate cross-domain applications in brain-computer interface systems, neural prostheses, and detection and monitoring of cognitive disorders, as well as to problems in social network data analytics and remote sensing in which dynamic, structured, high-dimensional data need to be processed in a robust and scalable fashion (top plane).

About the Lagrange program

DARPA’s Lagrange program seeks to develop new mathematical approaches to optimization problems in uncertain, dynamic, multiscale, and high-dimensional settings. By bridging methodologies developed for both discrete and continuous optimization, Lagrange aims to enable solutions for complex, realistic problems that involve dynamic environments, rapidly changing requirements, and increasing or decreasing amounts of information.
In particular, Lagrange will address the fact that many applications of interest today are posed as non-convex optimization problems and thus remain intractable despite significant recent theoretical and algorithmic progress in convex optimization. Lagrange seeks methodologies beyond current convex relaxation methods to advance scalability of algorithms; data-driven approaches that explore proper sampling of data sets; and computationally tractable methods of approximating distributions. The program aims to produce new mathematical frameworks and solution methods for large-scale optimization of complex systems, and algorithms that are parallelizable and scalable when implemented on computing platforms.

April 10, 2018